Tutorial - Introduction

Overview

Our tutorials are divided into categories roughly based on model modality (the type of data to be processed or generated).

Text (LLM)

| Tutorial | Description |
|---|---|
| text-generation-webui | Interact with a local AI assistant by running an LLM with oobabooga's text-generation-webui |
| Ollama | Get started effortlessly deploying GGUF models for chat and web UI |
| llamaspeak | Talk live with Llama using Riva ASR/TTS, and chat about images with LLaVA! |
| NanoLLM | Optimized inferencing library for LLMs, multimodal agents, and speech. |
| Small LLM (SLM) | Deploy Small Language Models (SLM) with reduced memory usage and higher throughput. |
| API Examples | Learn how to write Python code for doing LLM inference using popular APIs. |
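
As a minimal sketch of the kind of Python inference code mentioned above (not the code from the API Examples tutorial itself), the snippet below sends one prompt to a locally running Ollama server through its REST API using only the standard library. It assumes Ollama is serving on its default port 11434 and that the requested model has already been pulled; the model name `llama3.2` used in the usage note is only an example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the JSON 'response' field."""
    req = build_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

For example, after `ollama pull llama3.2`, calling `print(generate("llama3.2", "Why is the sky blue?"))` prints the model's answer. Setting `"stream": False` asks Ollama for a single JSON object instead of a stream of partial chunks, which keeps the parsing to one `json.loads` call.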

Text + Vision (VLM)

Give your locally running LLM access to vision!

| Tutorial | Description |
|---|---|
| LLaVA | Different ways to run the LLaVA vision/language model on Jetson for visual understanding. |
| Live LLaVA | Run multimodal models interactively on live video streams over a repeating set of prompts. |
| NanoVLM | Use mini vision/language models and the optimized multimodal pipeline for live streaming. |
| Llama 3.2 Vision | Run Meta's multimodal Llama-3.2-11B-Vision model on Orin with Hugging Face Transformers. |

Vision Transformers (ViT)

| Tutorial | Description |
|---|---|
| EfficientViT | MIT Han Lab's EfficientViT, Multi-Scale Linear Attention for High-Resolution Dense Prediction |
| NanoOWL | OWL-ViT optimized to run in real time on Jetson with NVIDIA TensorRT |
| NanoSAM | NanoSAM, a SAM model variant capable of running in real time on Jetson |
| SAM | Meta's SAM (Segment Anything Model) |
| TAM | TAM (Track-Anything Model), an interactive tool for video object tracking and segmentation |

Image Generation

| Tutorial | Description |
|---|---|
| Cosmos | Cosmos is a world model development platform consisting of world foundation models, tokenizers, and a video processing pipeline to accelerate the development of Physical AI at robotics and AV labs. |
| Genesis | Genesis is a physics platform designed for general-purpose robotics, embodied AI, and physical AI applications. |
| Flux + ComfyUI | Set up and run ComfyUI with the Flux model for image generation on Jetson Orin. |
| Stable Diffusion | Run AUTOMATIC1111's stable-diffusion-webui to generate images from prompts |
| SDXL | Ensemble pipeline consisting of a base model and a refiner for enhanced image generation. |
| nerfstudio | Experience neural reconstruction and rendering with nerfstudio and onboard training. |

Audio

RAG & Vector Database

| Tutorial | Description |
|---|---|
| NanoDB | Interactive demo showing the impact of a vector database that handles multimodal data |
| LlamaIndex | Implement RAG (Retrieval-Augmented Generation) so that an LLM can work with your documents |
| LlamaIndex | Reference application for building your own local AI assistants using LLM, RAG, and VectorDB |
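
The retrieval step at the heart of RAG can be sketched in a few lines: score stored document embeddings against a query embedding, keep the best matches, and paste them into the prompt. This is a hypothetical, stdlib-only illustration, not code from the tutorials above; in practice the vectors come from an embedding model and a vector database performs the search instead of this linear scan.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(
    query_vec: Sequence[float],
    doc_vecs: Sequence[Sequence[float]],
    docs: Sequence[str],
    k: int = 2,
) -> List[Tuple[str, float]]:
    """Return the k documents most similar to the query, best match first (linear scan)."""
    scored = [(doc, cosine_similarity(query_vec, vec)) for doc, vec in zip(docs, doc_vecs)]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, context_docs: Sequence[str]) -> str:
    """Prepend the retrieved passages to the question so the LLM can ground its answer."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only the context below.\n{context}\n\nQuestion: {question}"
```

With toy 3-dimensional vectors, `retrieve([1.0, 0.0, 0.0], [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0]], ["a", "b", "c"])` ranks `"a"` first and `"b"` second; the texts of those two hits would then be passed to `build_prompt` before calling the LLM.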

API Integrations

About NVIDIA Jetson

Note

We are mainly targeting Jetson Orin generation devices for deploying the latest LLMs and generative AI models.


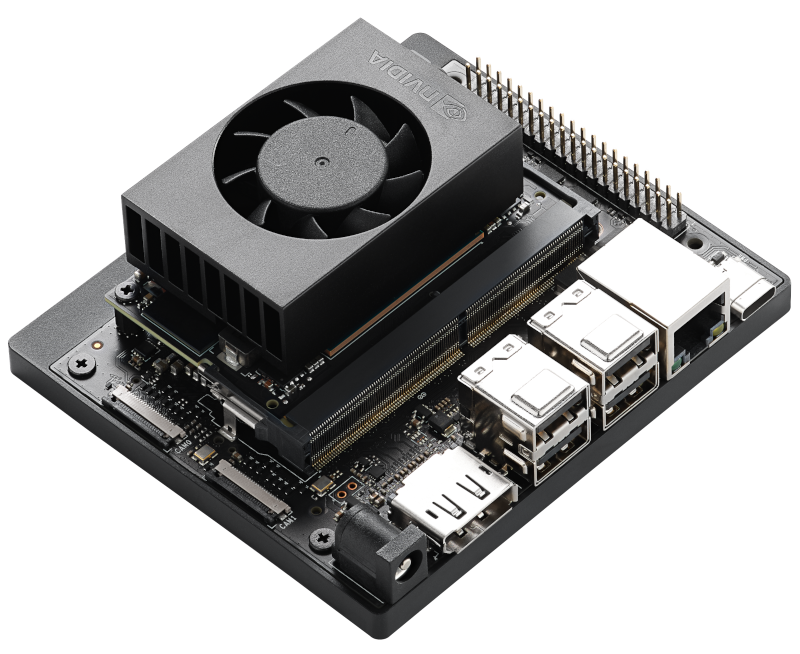

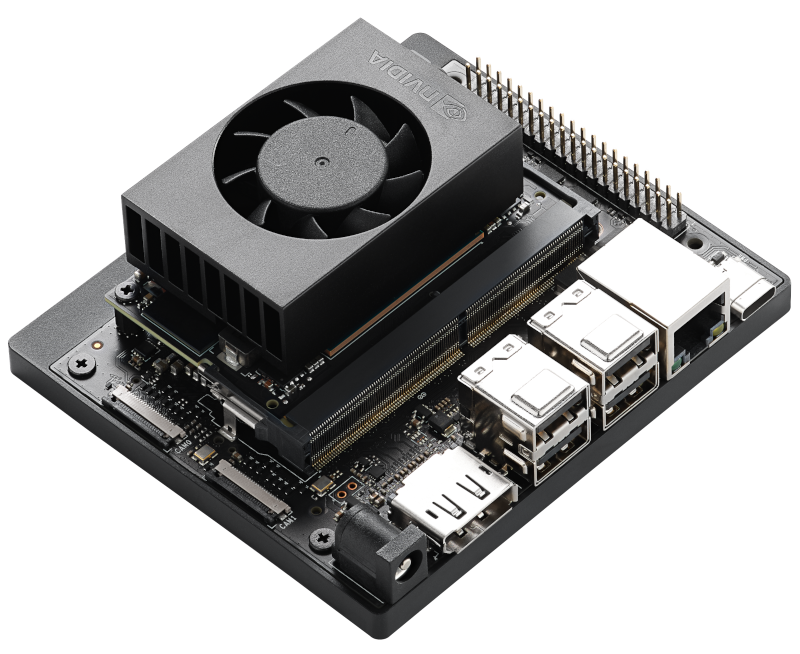

| | Jetson AGX Orin 64GB Developer Kit | Jetson AGX Orin Developer Kit | Jetson Orin Nano Developer Kit |
|---|---|---|---|
| GPU | 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores | 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores | 1024-core NVIDIA Ampere architecture GPU with 32 Tensor Cores |
| RAM (CPU+GPU) | 64GB | 32GB | 8GB |
| Storage | 64GB eMMC (+ NVMe SSD) | 64GB eMMC (+ NVMe SSD) | microSD card (+ NVMe SSD) |
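
Because the RAM on these kits is shared between the CPU and GPU, one rough first check of whether a model fits is its weight footprint: parameter count times bits per weight, divided by 8 to get bytes. The sketch below applies that arithmetic to the three developer kits listed above. It is an approximation under stated assumptions: it counts weights only (ignoring the KV cache, activations, runtime overhead, and memory used by the OS), and the 25% headroom default is an arbitrary choice for illustration, not an NVIDIA guideline.

```python
from typing import List

# Unified memory per developer kit, in GB, as listed in the table (shared by CPU and GPU).
DEVKIT_RAM_GB = {
    "Jetson AGX Orin 64GB Developer Kit": 64,
    "Jetson AGX Orin Developer Kit": 32,
    "Jetson Orin Nano Developer Kit": 8,
}


def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in decimal GB."""
    # (params_billion * 1e9 params) * (bits / 8 bytes per param) / 1e9 bytes per GB
    return params_billion * bits_per_weight / 8


def devkits_that_fit(
    params_billion: float, bits_per_weight: float, headroom: float = 0.25
) -> List[str]:
    """Kits whose RAM holds the weights while leaving `headroom` (a fraction) free.

    The default headroom of 0.25 is an assumption, not a measured requirement.
    """
    need = weight_memory_gb(params_billion, bits_per_weight)
    return [name for name, ram in DEVKIT_RAM_GB.items() if need <= ram * (1 - headroom)]
```

For example, an 8B-parameter model quantized to 4 bits needs about 8 × 4 / 8 = 4 GB for its weights, half of the Orin Nano's 8GB, while the same model at 16 bits needs about 16 GB and, under these assumptions, only fits on the two AGX Orin kits.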