Tutorial - NanoOWL

Let's run NanoOWL, OWL-ViT optimized to run in real time on Jetson with NVIDIA TensorRT.

What you need

- One of the following Jetson devices:

  - Jetson AGX Orin (64GB)
  - Jetson AGX Orin (32GB)
  - Jetson Orin NX (16GB)
  - Jetson Orin Nano (8GB)

- Running one of the following versions of JetPack:

  - JetPack 5 (L4T r35.x)
  - JetPack 6 (L4T r36.x)

- NVMe SSD highly recommended for storage speed and space

  - 7.2 GB for container image
  - Space for models

- Clone and set up jetson-containers:

      git clone https://github.com/dusty-nv/jetson-containers
      bash jetson-containers/install.sh
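If you are not sure which JetPack generation a device is running, a quick check of the L4T release can help before pulling the container. The sketch below is a minimal example, not part of NanoOWL or jetson-containers. It assumes the release string lives in /etc/nv_tegra_release and that its first line looks like "# R35 (release), REVISION: 4.1, ..."; the function names and the mapping table are made up for illustration.

```python
import re
from pathlib import Path

# Assumption: Jetson Linux records its L4T release in this file.
RELEASE_FILE = Path("/etc/nv_tegra_release")

# L4T major release -> JetPack generation, as listed in this tutorial.
SUPPORTED_MAJORS = {35: "JetPack 5", 36: "JetPack 6"}


def parse_l4t_major(first_line):
    """Return the L4T major release (e.g. 35) or None if it cannot be parsed."""
    match = re.search(r"\bR(\d+)\s*\(release\)", first_line)
    return int(match.group(1)) if match else None


def jetpack_for(first_line):
    """Return the matching JetPack name, or None if the release is not listed."""
    return SUPPORTED_MAJORS.get(parse_l4t_major(first_line))


if __name__ == "__main__":
    if RELEASE_FILE.exists():
        lines = RELEASE_FILE.read_text().splitlines()
        name = jetpack_for(lines[0]) if lines else None
        print(name or "L4T release not in the supported list for this tutorial")
    else:
        print(f"{RELEASE_FILE} not found; this does not look like a Jetson host")
```

Run it on the Jetson host rather than inside the container, since the release file may not be visible from within the container.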

How to start

Use the jetson-containers run and autotag commands to automatically pull or build a compatible container image.

jetson-containers run --workdir /opt/nanoowl $(autotag nanoowl)

How to run the tree prediction (live camera) example

- Ensure you have a camera device connected

      ls /dev/video*

  If no video device is found, exit from the container and check if you can see a video device on the host side.

- Install the missing module.

      pip install aiohttp

- Launch the demo

      cd examples/tree_demo
      python3 tree_demo.py --camera 0 --resolution 640x480 \
          ../../data/owl_image_encoder_patch32.engine

  | Option | Description | Example |
  | --- | --- | --- |
  | --camera | To specify camera index (corresponds to /dev/video*) when multiple cameras are connected | 1 |
  | --resolution | To specify the camera open resolution in the format {width}x{height} | 640x480 |

  Info: If it fails to find or load the TensorRT engine file, build the TensorRT engine for the OWL-ViT vision encoder on your Jetson device. Run this from /opt/nanoowl, the container's working directory, so that the relative path matches the one the demo expects.

      python3 -m nanoowl.build_image_encoder_engine \
          data/owl_image_encoder_patch32.engine

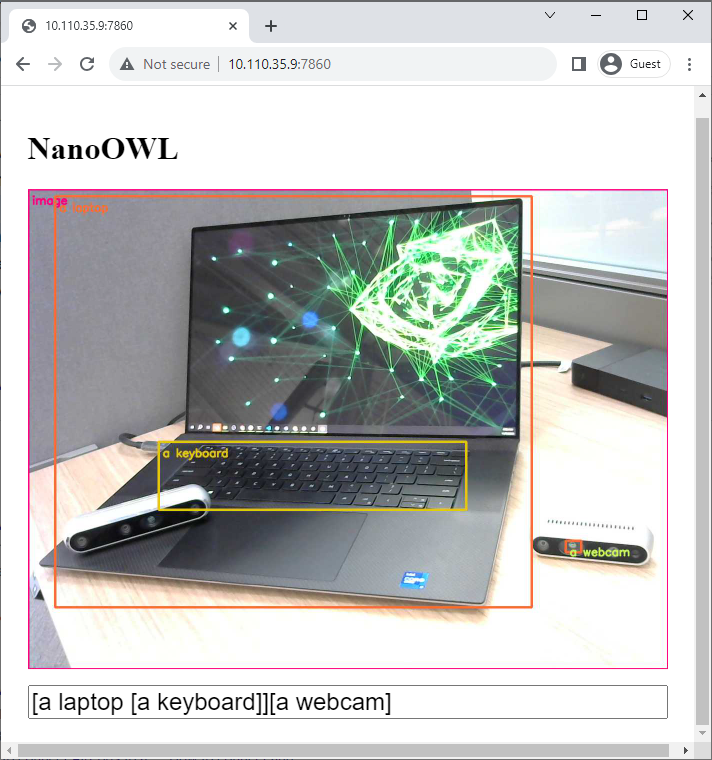

- Then open your browser to http://<ip address>:7860

- Type whatever prompt you like to see what works! Here are some examples:

  - Example: [a face [a nose, an eye, a mouth]]
  - Example: [a face (interested, yawning / bored)]
  - Example: (indoors, outdoors)
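The example prompts nest square brackets and parentheses, and a missing closing bracket is an easy mistake when typing into the browser. The sketch below is a standalone balance check you could run on a prompt before trying it. It is not NanoOWL's own parser, and the function name check_prompt is hypothetical. It only assumes what the examples show: that the two bracket types can be nested and must be closed in order. As far as the NanoOWL project describes it, square brackets request detection and parentheses request classification, but the check does not depend on that.

```python
# Opening bracket -> expected closing bracket in a tree prompt.
PAIRS = {"[": "]", "(": ")"}


def check_prompt(prompt):
    """Return (ok, message) describing whether brackets are balanced and nested."""
    stack = []  # (opening character, position) for every bracket still open
    for pos, ch in enumerate(prompt):
        if ch in PAIRS:
            stack.append((ch, pos))
        elif ch in PAIRS.values():
            if not stack:
                return False, f"unexpected '{ch}' at position {pos}"
            opener, open_pos = stack.pop()
            if PAIRS[opener] != ch:
                return False, (
                    f"'{opener}' at position {open_pos} "
                    f"closed by '{ch}' at position {pos}"
                )
    if stack:
        opener, open_pos = stack[-1]
        return False, f"'{opener}' at position {open_pos} is never closed"
    return True, "balanced"


if __name__ == "__main__":
    for prompt in (
        "[a face [a nose, an eye, a mouth]]",
        "[a face (interested, yawning / bored)]",
        "(indoors, outdoors)",
        "[a face (happy]",
    ):
        print(prompt, "->", check_prompt(prompt))
```

A prompt that passes this check is only syntactically balanced; whether the model detects or classifies anything useful still depends on the wording of the labels.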

Result